(Article source: The Verge; compiled by Chen Jia. Part of the content comes from the article “Go and Artificial Intelligence” by Shi Jun in “Knowledge is Power” magazine, reviewed by Dr. Li Changliang of the Institute of Automation, Chinese Academy of Sciences. Original article; if reprinting, please credit the Popular Science China WeChat public account.)

Introduction

Today, in Seoul, South Korea, top Korean nine-dan Go player Lee Sedol took on AlphaGo, an artificial intelligence system developed by Google’s DeepMind team. Both sides have publicly said they are confident of ultimate victory, and it is hard to say who will win. For onlookers who admit they don’t really follow AI or Go, how should this high-level duel be understood?

1. “Deep Blue” vs. the artificial intelligence AlphaGo

This is not the first time artificial intelligence has faced humans in a board game. Back in 1997, a supercomputer called “Deep Blue” beat world chess champion Garry Kasparov. Deep Blue was a supercomputer, which may seem different from an artificial intelligence program like AlphaGo, but an AI program also needs a computer to run on, just as a brain needs a body, so comparing the two side by side is not unreasonable. Supercomputing has advanced so far since then that even a common integrated graphics chip today exceeds 700 GFLOPS, and Deep Blue has long since been left far behind. It is worth mentioning that the world’s fastest supercomputer is China’s Tianhe-2, whose performance reaches 33.86 PFLOPS, roughly 3 million times that of Deep Blue.

So how does Google’s AlphaGo compare with Deep Blue? In a paper on the system published earlier in the journal “Nature”, Google stated that AlphaGo runs on a machine with 48 CPUs and 8 GPUs. A direct comparison is difficult because AlphaGo runs on a cloud computing platform, but we can make a rough one using figures from a competitor’s platform, such as Alibaba Cloud.

In December 2015, Alibaba Cloud opened its high-performance computing service to the public, with a single-machine floating-point performance of 11 TFLOPS. If Google’s machines perform about as well as Alibaba Cloud’s, then the hardware running AlphaGo is on the order of 1,000 times as powerful as Deep Blue.
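The performance comparisons above are simple divisions of one peak FLOPS figure by another. The sketch below reproduces them, using the 700 GFLOPS, 11 TFLOPS and 33.86 PFLOPS figures from the text. Deep Blue’s own figure is an assumption: the article does not state it, so the commonly cited value of about 11.38 GFLOPS is used here.

```python
# Peak floating-point throughput, in FLOPS (floating-point operations per second).
# DEEP_BLUE is an assumed, commonly cited figure; the article itself does not state it.
DEEP_BLUE = 11.38e9          # ~11.38 GFLOPS (assumption)
INTEGRATED_GPU = 700e9       # "exceeds 700 GFLOPS"
ALIBABA_NODE = 11e12         # 11 TFLOPS per machine
TIANHE_2 = 33.86e15          # 33.86 PFLOPS


def speedup(machine_flops: float, baseline_flops: float = DEEP_BLUE) -> float:
    """Return how many times faster machine_flops is than baseline_flops."""
    return machine_flops / baseline_flops


for name, flops in [("integrated graphics", INTEGRATED_GPU),
                    ("Alibaba Cloud machine", ALIBABA_NODE),
                    ("Tianhe-2", TIANHE_2)]:
    print(f"{name}: about {speedup(flops):,.0f} times Deep Blue")
```

Peak FLOPS is only a rough yardstick: Deep Blue relied heavily on custom chess chips, so these ratios say more about raw arithmetic capacity than about playing strength.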

2. Why Go for this human-versus-machine battle?

Most of us know a little about both Go and artificial intelligence, but how are the two connected?

Go has long been regarded as the key test of whether machines can beat humans. It has a long history and is seen as a distillation of human intelligence. The board has 19 vertical and 19 horizontal lines forming 361 intersections. Since each intersection can be empty, black, or white, there are at most 3^361 (about 10^172) board configurations, of which roughly 10^170 are legal positions. By comparison, the observable universe contains only about 10^80 atoms, and the number of chess positions is at most about 2^155 (roughly 10^46). In other words, those seemingly simple 19 lines and 361 points form a vast universe of their own, which is why some people call Go the game that best embodies human intelligence. Before this match, some predicted it would take AI at least another ten years to beat top human players. Setting commercial considerations aside, then, the significance of this match may be that it lets us witness history, and it shows that research on artificial intelligence has reached a new height.
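The 3^361 figure comes from giving each of the 361 points three possible states, which also counts illegal arrangements, so it is an upper bound. Python’s exact integers make it easy to check the orders of magnitude being compared; the 2^155 chess bound is the article’s, and 10^80 atoms is the usual rough estimate.

```python
INTERSECTIONS = 19 * 19          # 361 points on a full-size board
STATES_PER_POINT = 3             # empty, black stone, or white stone

# Upper bound on Go board configurations: each point independently takes one of 3 states.
go_upper_bound = STATES_PER_POINT ** INTERSECTIONS   # exact Python integer

# Upper bound on chess positions as quoted in the text.
chess_upper_bound = 2 ** 155

ATOMS_IN_OBSERVABLE_UNIVERSE = 10 ** 80  # common order-of-magnitude estimate


def order_of_magnitude(n: int) -> int:
    """Return floor(log10(n)) for a positive integer, computed exactly from its digits."""
    return len(str(n)) - 1


print("Go configurations: ~10^%d" % order_of_magnitude(go_upper_bound))
print("Chess positions:   ~10^%d" % order_of_magnitude(chess_upper_bound))
print("Atoms in universe: ~10^%d" % order_of_magnitude(ATOMS_IN_OBSERVABLE_UNIVERSE))
```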

3. How difficult is it for AI to play Go?

Difficulty 1: The foundation of Go is judging life and death. Deciding whether a group of stones on the board is alive or dead is the most basic task, yet it is very hard to work out, and a group’s status can keep changing as play continues. Searching local life-and-death situations is therefore one of the hardest problems in developing Go AI.
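Full life-and-death judgment requires search, but it rests on a simple primitive: a connected group of same-coloured stones is captured when it has no liberties (adjacent empty points). The sketch below finds a group and its liberties with a flood fill. The board representation (a list of strings using “.”, “B” and “W”) and the function name are illustrative assumptions, and the code only counts liberties; it does not decide whether a group can live.

```python
from typing import List, Set, Tuple

Point = Tuple[int, int]


def group_and_liberties(board: List[str], row: int, col: int) -> Tuple[Set[Point], Set[Point]]:
    """Flood-fill the group containing (row, col); return (stones, liberties)."""
    color = board[row][col]
    if color not in "BW":
        raise ValueError("no stone at the given point")
    rows, cols = len(board), len(board[0])
    stones: Set[Point] = set()
    liberties: Set[Point] = set()
    stack = [(row, col)]
    while stack:
        r, c = stack.pop()
        if (r, c) in stones:
            continue                      # already visited via another path
        stones.add((r, c))
        # Only orthogonal neighbours count in Go, never diagonals.
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                neighbour = board[nr][nc]
                if neighbour == ".":
                    liberties.add((nr, nc))
                elif neighbour == color and (nr, nc) not in stones:
                    stack.append((nr, nc))
    return stones, liberties


# Hypothetical position: the black corner stone is surrounded by a three-stone white group.
EXAMPLE_BOARD = [
    "BW...",
    "WW...",
    ".....",
]
black_stones, black_libs = group_and_liberties(EXAMPLE_BOARD, 0, 0)
white_stones, white_libs = group_and_liberties(EXAMPLE_BOARD, 0, 1)
print(len(black_libs), "liberties: black stone is captured" if not black_libs else "")
print(len(white_stones), "white stones with", len(white_libs), "liberties")
```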

Difficulty 2: Shape reflects the distinctly human, intuitive and visual way of thinking. A player’s feel for shape comes entirely from experience; it is key to winning or losing and a mark of the player’s strength. Human players avoid wasting stones attacking an opponent’s group that is already alive, or trying to rescue their own group that is already dead. Giving AI this feel for shape is an important problem in artificial intelligence.

Difficulty 3: Special algorithms are also needed for fast pattern recognition. The well-known computer Go programmer Boone said: “With a fast pattern recognition method, it is not difficult to teach the program to play tesuji (skillful tactical moves).” Pattern recognition is therefore an important part of any Go program, and an efficient pattern recognition algorithm reflects the program’s strength.
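One common style of fast pattern matching, offered here as an illustration and not as the method of Boone or any particular program, is to read the small neighbourhood around a candidate move and look it up in a precomputed table. The sketch below uses the 3×3 neighbourhood and normalises it over the 8 rotations and reflections of the board, so one table entry matches every orientation. The table contents, scores and helper names are all hypothetical.

```python
from typing import Dict, List, Tuple

Pattern = Tuple[str, ...]

# Offsets of the 8 neighbours of a point, row by row (the centre is the candidate move).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
           (0, -1),           (0, 1),
           (1, -1),  (1, 0),  (1, 1)]

# The 8 symmetries of a square, written as maps on (row, col) offsets.
TRANSFORMS = [
    lambda r, c: (r, c),    # identity
    lambda r, c: (c, -r),   # rotate 90 degrees
    lambda r, c: (-r, -c),  # rotate 180 degrees
    lambda r, c: (-c, r),   # rotate 270 degrees
    lambda r, c: (r, -c),   # mirror left-right
    lambda r, c: (-r, c),   # mirror top-bottom
    lambda r, c: (c, r),    # mirror on the main diagonal
    lambda r, c: (-c, -r),  # mirror on the anti-diagonal
]


def neighbourhood(board: List[str], r: int, c: int) -> Pattern:
    """Read the 8 points around (r, c); '#' marks points off the board."""
    cells = []
    for dr, dc in OFFSETS:
        nr, nc = r + dr, c + dc
        inside = 0 <= nr < len(board) and 0 <= nc < len(board[0])
        cells.append(board[nr][nc] if inside else "#")
    return tuple(cells)


def canonical(p: Pattern) -> Pattern:
    """Smallest of the 8 symmetric variants, so all orientations share one key."""
    grid = dict(zip(OFFSETS, p))
    return min(tuple(grid[t(dr, dc)] for dr, dc in OFFSETS) for t in TRANSFORMS)


# Hypothetical pattern table: canonical 3x3 pattern -> heuristic score for playing the centre.
PATTERN_TABLE: Dict[Pattern, float] = {}


def add_pattern(rows: List[str], score: float) -> None:
    """Register a pattern given as three 3-character rows (the centre character is ignored)."""
    PATTERN_TABLE[canonical(neighbourhood(rows, 1, 1))] = score


def pattern_score(board: List[str], r: int, c: int) -> float:
    """Constant-time lookup of the move at (r, c); unknown patterns score 0."""
    return PATTERN_TABLE.get(canonical(neighbourhood(board, r, c)), 0.0)


add_pattern(["BW.", "...", "..."], 1.0)   # made-up entry for illustration
print(pattern_score(["...", "...", ".WB"], 1, 1))  # same shape rotated 180 degrees
```

Normalising to a canonical form costs 8 small tuple builds per lookup but lets the table store each shape once instead of up to eight times.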

To put it simply: on the one hand, Go is enormously complex, and every move opens up many more possibilities, which demands a great deal of computing power. On the other hand, even if a machine stores a large amount of existing game data, the complexity of the game and the unpredictability of players’ moves place high demands on its adaptability, that is, its capacity for deep learning.
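How fast “every move opens up more possibilities” adds up can be estimated with the rough game-tree size b^d, where b is the number of legal moves per turn and d the length of a game. The values below (b≈35, d≈80 for chess; b≈250, d≈150 for Go) are ballpark figures often quoted for these games, including in DeepMind’s 2016 Nature paper; they are assumptions, not numbers from this article. Logarithms keep the results readable.

```python
import math


def tree_size_log10(branching: int, depth: int) -> float:
    """log10 of branching**depth, the rough number of move sequences in a full game tree."""
    return depth * math.log10(branching)


def positions_at_lookahead(branching: int, plies: int) -> int:
    """Move sequences a brute-force search must consider to look `plies` moves ahead."""
    return branching ** plies


# Assumed ballpark (branching factor, game length) values.
GAMES = {"chess": (35, 80), "go": (250, 150)}

for name, (b, d) in GAMES.items():
    print(f"{name}: full tree ~10^{tree_size_log10(b, d):.0f}, "
          f"4 moves ahead = {positions_at_lookahead(b, 4):,} sequences")
```

Even a four-move lookahead is over a thousand times larger in Go than in chess under these assumptions, which is why brute-force search alone was not enough.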

4. If Go is so complicated, how can AI win?

Given how difficult all of this is, does AI stand no chance? Not at all!

To beat humans, researchers brought out their secret weapons: the new generation of machine learning techniques in artificial intelligence, deep learning and reinforcement learning.

The idea behind deep learning is to let a computer learn and think somewhat like the human brain. Unlike traditional machine learning, deep learning draws on both computer science and neuroscience so that computers can learn on their own. For example, rather than a human telling the computer what a cat is and having it check pictures against that definition, the computer is given a large number of pictures, analyzes them itself, and forms the concept of “cat” on its own, much as the brain’s visual cortex responds to what it sees. In the future, artificial intelligence systems with deep learning capabilities could alert drivers to road conditions around the car, and they can also be applied to speech and face recognition, medical diagnosis, and other fields.
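Deep networks stack many layers of artificial neurons, but the core idea of learning a rule from examples instead of being told it already shows up in a single neuron. The sketch below trains one logistic neuron by gradient descent on a tiny, hypothetical labelled dataset; it is the smallest building block of deep learning, not a deep network, and the learning rate and epoch count are arbitrary choices.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    """Squash any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def activation(w: List[float], b: float, x: List[float]) -> float:
    """Neuron output: weighted sum of inputs plus bias, passed through the sigmoid."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_neuron(data: List[Tuple[List[float], int]], epochs: int = 2000,
                 lr: float = 0.5) -> Tuple[List[float], float]:
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n = len(data[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for x, y in data:
            err = activation(w, b, x) - y   # gradient of log-loss w.r.t. the weighted sum
            for i in range(n):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * gw / len(data) for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    return 1 if activation(w, b, x) >= 0.5 else 0


# Toy examples: the rule "label is 1 only when both inputs are 1" is never written down;
# the neuron has to infer it from the labelled data.
EXAMPLES = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]
w, b = train_neuron(EXAMPLES)
print([predict(w, b, x) for x, _ in EXAMPLES])
```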

The term reinforcement learning comes from behavioral psychology, which views learning as a trial-and-error process that maps changing states of the environment to suitable actions. It resembles the Chinese saying “a fall into the pit, a gain in your wit.” Reinforcement learning can learn strategies and is widely used in board games and maze solving. Together, deep learning and reinforcement learning, backed by big data and powerful computing, let computers complete tasks that once required highly specialized professionals, without human participation, and sometimes even surpass the experts.
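The trial-and-error loop can be illustrated with tabular Q-learning on a tiny maze. This is a swapped-in textbook technique, not what AlphaGo uses (AlphaGo combines neural networks with self-play), and the five-cell corridor, its reward, and all parameter values are hypothetical. The agent is never told “go right”; it discovers that from the reward at the exit.

```python
import random
from typing import List, Tuple

N_STATES = 5          # cells 0..4 of a hypothetical corridor; cell 4 is the exit
ACTIONS = (-1, +1)    # step left or step right
GOAL = N_STATES - 1


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Environment: move, stay inside the corridor, reward 1 only on reaching the exit."""
    nxt = min(max(state + action, 0), GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action index]: estimated value
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore a random action with probability epsilon, otherwise take the best known one.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Learn from the outcome: move the estimate toward reward + discounted future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(GOAL)]
print(policy)
```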

In addition, to get more computing power, Google connected AlphaGo to a network of 1,202 CPUs, raising the system’s computing power to roughly 25 times that of the single-machine setup. By this estimate, AlphaGo is about 25,000 times as powerful as Deep Blue. At this pace, where a human might play 1,000 games in a year, the AI could play a million games in a day. So with enough training, AlphaGo has a real chance of beating human players. After all, after long hours at the board humans get tired and make mistakes because of physical and psychological limits, but machines do not.
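The 25-fold and 25,000-fold figures follow from comparing CPU counts (48 for the single machine, 1,202 for the network) and multiplying by the earlier estimate of about 1,000 times Deep Blue. The sketch below makes that arithmetic explicit under a strong simplifying assumption: that computing power scales linearly with the number of CPUs, ignoring GPUs and the overhead of communication between machines.

```python
SINGLE_MACHINE_CPUS = 48
DISTRIBUTED_CPUS = 1202
SINGLE_MACHINE_VS_DEEP_BLUE = 1000   # the article's rough "1,000 times Deep Blue" estimate

# Assumption: power grows in proportion to CPU count (ignores GPUs and network overhead).
scale_up = DISTRIBUTED_CPUS / SINGLE_MACHINE_CPUS
vs_deep_blue = SINGLE_MACHINE_VS_DEEP_BLUE * scale_up

print(f"CPU count grows about {scale_up:.1f} times")
print(f"Rough multiple of Deep Blue: {vs_deep_blue:,.0f}")
```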

However, Google Chairman Eric Schmidt said that even if the machine wins, humanity still wins. Even if AlphaGo beats Lee Sedol in the end, it is far from clear that artificial intelligence will “crush” humans. There is no need to be too anxious: artificial intelligence may still have a long way to go.

5. Human-versus-machine battles in history

01

Deep Blue defeats Kasparov

In 1997, IBM’s supercomputer “Deep Blue” defeated Garry Kasparov, then the world’s top-ranked chess player, with two wins, one loss, and three draws.

02

Inspur Tiansuo challenges human Chinese chess masters

In 2006, the Inspur Tiansuo supercomputer took on human masters of Chinese chess (xiangqi). In the final showdown, grandmaster Xu Yinchuan and Inspur Tiansuo drew both games, demonstrating the machine’s formidable computing power to the world.

03

All-rounder Watson challenges humans

In 2011, IBM’s “Watson”, a younger sibling of “Deep Blue”, took on two human champions on the long-running American quiz show “Jeopardy!” and won.

04

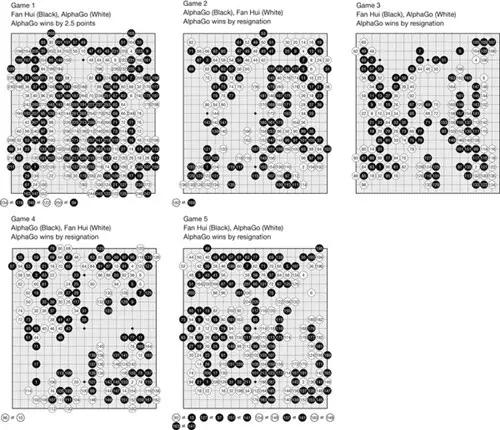

AlphaGo defeats European champion Fan Hui 5-0

In January 2016, it was announced that AlphaGo, the Go program developed by DeepMind, Google’s UK-based artificial intelligence company, had defeated the professional Go player Fan Hui. It was the first time a computer program had beaten a human professional at Go on a full-size board without a handicap.

Since the start of the 21st century, science has brought one breakthrough after another: big data, artificial intelligence, and virtual reality; the discovery of Earth-like planets and gravitational waves; driverless cars and quantum computing. This is an era of constant innovation and surprise, and we are fortunate to be part of it. That may be more interesting than simply debating who wins or loses the match.